HOW ASTRA STARTED

Motivation

- Around 90% of car accidents involve human distraction

- The use of autonomous cars in the US could save around $800 billion annually.

- In the US, 6 billion minutes are lost to commuting

- People with disabilities have limited transportation options

SOLUTION

The ASTRA model is an advanced deep-learning system that combines multiple techniques to process and analyze camera data.

Cost-Effective & Easy-to-Use System

Using only low-cost cameras, we can extract information from simple data formats such as images and videos.

Passive Sensing

Cameras are passive sensors that simply capture information about their environment. Radar and LiDAR are active sensors: they need special hardware that fires electromagnetic waves at the surroundings and reads the signal that bounces back.

High Resolution Data

Cameras capture high-resolution data without much processing, unlike Radar and LiDAR, which require a lot of processing to remove noise and extract information about the surroundings. That processing inevitably discards some of the information in the data.

FEATURES OF ASTRA

Traffic Sign Recognition

Train a Convolutional Neural Network to recognize (German) Traffic Signs

Lane Detection

Figure panels: Original Image, Canny Edge Detection, Final Result

Use the Canny edge detection algorithm and the Hough transform to detect the driving lanes
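The voting idea behind the Hough transform can be sketched without an image library. This is a minimal illustration, not the project's code: it assumes the edge pixels have already been found (for example by a Canny detector) and are given as (x, y) coordinates. The function name `hough_strongest_line` and the bin sizes are hypothetical choices.

```python
import math
from collections import Counter


def hough_strongest_line(edge_points, theta_steps=180, rho_step=1.0):
    """Return (rho, theta_degrees, votes) for the line with the most votes.

    Each edge point (x, y) votes for every line rho = x*cos(theta) + y*sin(theta)
    that passes through it; collinear points pile their votes into one bin.
    """
    votes = Counter()
    for x, y in edge_points:
        for i in range(theta_steps):
            theta = math.pi * i / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_step), i)] += 1
    (rho_bin, theta_bin), count = votes.most_common(1)[0]
    return rho_bin * rho_step, 180.0 * theta_bin / theta_steps, count


# Hypothetical edge pixels lying on the vertical line x = 5.
points = [(5, y) for y in range(10)]
rho, theta_deg, count = hough_strongest_line(points)
```

For the ten collinear points above, the winning bin receives all ten votes. In a real lane detector the strongest few bins, not just one, would be kept as candidate lane lines.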

Object Detection

Detect the road objects that our model recognizes and draw a bounding box around each one

Semantic Segmentation

Maps each pixel to a class label that identifies the type of object it belongs to.

Our model does this for 19 different classes, including tree, vegetation, pedestrian, pavement, road, building, traffic sign, and car.
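As a rough illustration of the per-pixel mapping, the sketch below assumes the network outputs one score per class for every pixel and picks the highest-scoring class. The three class names and the scores are made up for the example; they stand in for the 19 real classes.

```python
def label_map(scores):
    """Turn per-pixel class scores into a per-pixel class index.

    scores[row][col] is a list with one score per class; the predicted class
    for a pixel is the index of its highest score (argmax).
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# Hypothetical three-class subset of the 19 classes, for illustration only.
CLASS_NAMES = ["road", "car", "pedestrian"]

scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1]],
    [[0.6, 0.3, 0.1], [0.1, 0.2, 0.7]],
]
labels = label_map(scores)
named = [[CLASS_NAMES[i] for i in row] for row in labels]
```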

Depth Estimation

Figure panels: Left Image, Right Image, Depth Map

Create a depth map from the differences between two images taken from slightly different angles, similar to how human vision perceives depth (stereopsis)
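For two rectified, parallel cameras, the standard relation between the pixel shift (disparity) of a point and its depth is depth = focal length × baseline / disparity. The sketch below applies that relation to one row of disparities. The focal length and baseline are assumed values picked to keep the arithmetic simple; they are not the project's camera parameters.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of a point seen by two rectified, parallel cameras.

    disparity_px is the horizontal shift of the point between the left and
    right image; larger shifts mean the point is closer.
    """
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: treat as infinitely far
    return focal_length_px * baseline_m / disparity_px


# Assumed camera parameters, chosen only to make the arithmetic easy to follow.
FOCAL_LENGTH_PX = 700.0
BASELINE_M = 0.5

disparity_row = [70.0, 35.0, 7.0, 0.0]
depth_row = [
    depth_from_disparity(d, FOCAL_LENGTH_PX, BASELINE_M) for d in disparity_row
]
```

Halving the disparity doubles the estimated depth, which is why distant objects, with shifts of only a few pixels, are the hardest to range accurately.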

Clear Path Approximation

Approximate the clearest path from the semantic segmentation output (and from the depth map as well when two image sources are available)
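One simple way to turn a segmentation result into a path estimate is shown below. This is an assumed heuristic for illustration, not necessarily the method ASTRA uses: for each image column it counts the road pixels stacked up from the bottom row and picks the column with the longest uninterrupted stretch. The class index `ROAD = 0` is hypothetical, and depth is not used.

```python
ROAD = 0  # assumed class index for "road" in the segmentation output


def free_run_per_column(labels):
    """Count, for each column, the road pixels stacked up from the bottom row."""
    height, width = len(labels), len(labels[0])
    runs = []
    for col in range(width):
        run = 0
        for row in range(height - 1, -1, -1):  # bottom of the image is nearest
            if labels[row][col] != ROAD:
                break
            run += 1
        runs.append(run)
    return runs


def clearest_column(labels):
    """Pick the column with the longest uninterrupted stretch of road."""
    runs = free_run_per_column(labels)
    return max(range(len(runs)), key=runs.__getitem__)


# Tiny hypothetical label map: 0 = road, 1 = anything else.
labels = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
runs = free_run_per_column(labels)
best = clearest_column(labels)
```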

Steering Angle Prediction

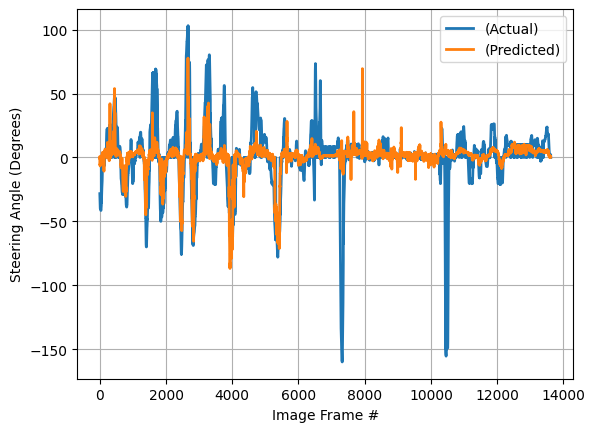

Train a Convolutional Neural Network on part of a video, then predict the steering angle from the frames in the rest of the video

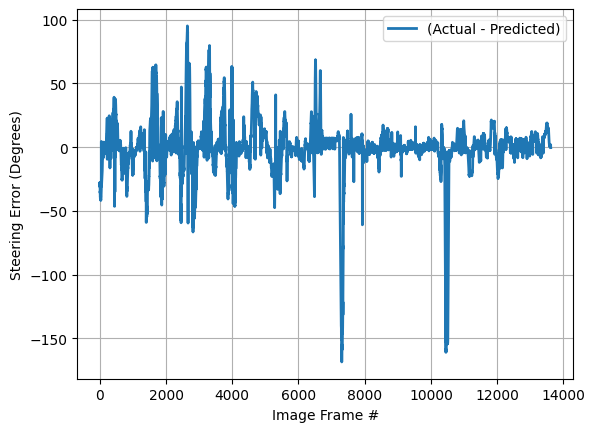

RESULTS: Steering Angle Prediction

Analysis

An average error of 13-25 degrees may not sound bad for real-world driving, but the plots above suggest otherwise. The predictions appear to line up with the actual steering angles in the first plot, yet the residual plot (actual minus predicted value) shows that the steering model fails to reduce the error whenever the actual steering angle is far from 0. In fact, it seems to introduce MORE error: the residuals keep a similar shape, as if the predictions had not gotten any closer to the actual steering angle at all.

Potential Sources of Error:

- Most of the data the CNN was trained on contained no big turns, so the average error is low but the model fails to predict large turns.

- The left plot DOES hint that the model is working, but a time lag in the predictions produces a consistent error, which is what shows up in the residual plot.
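The time-lag hypothesis can be checked by shifting the predictions against the actual angles and seeing which shift gives the smallest average residual. The sketch below does this on synthetic data in which the prediction is a copy of the true signal delayed by two frames. The numbers are invented for illustration and are not ASTRA's results.

```python
def mean_abs_error(actual, predicted):
    """Average absolute residual (actual - predicted) over paired samples."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def best_lag(actual, predicted, max_lag):
    """Find the frame shift that best aligns the prediction with the truth.

    A lag of k means predicted[t + k] is compared with actual[t], i.e. the
    prediction trails the true angle by k frames.
    """
    errors = {}
    for lag in range(max_lag + 1):
        a = actual[: len(actual) - lag]
        p = predicted[lag:]
        errors[lag] = mean_abs_error(a, p)
    return min(errors, key=errors.get), errors


# Synthetic steering angles (degrees); the prediction lags by two frames.
actual = [0, 0, 10, 30, 10, 0, 0, 0]
predicted = [0, 0, 0, 0, 10, 30, 10, 0]

lag, errors = best_lag(actual, predicted, max_lag=3)
```

If the best lag on real data is clearly non-zero and the error at that lag is much lower than at lag 0, the residuals are dominated by delay rather than by a failure to recognize turns.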

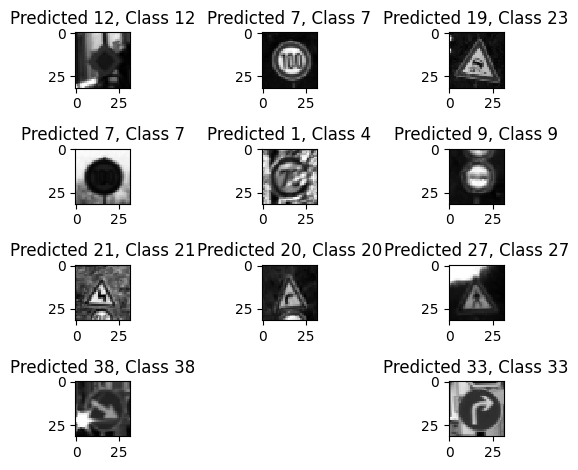

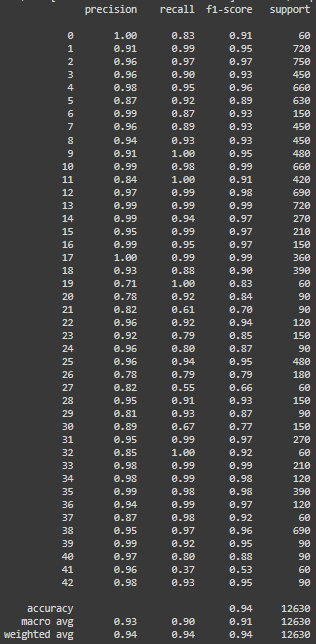

RESULTS: German Traffic Sign Recognition

Sample Predictions

Training Results

Figure panels: Loss (Sparse Categorical Crossentropy), Accuracy

The model appears to train well, apart from slight overfitting at around the 10th epoch.
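Sparse categorical cross-entropy, the loss named in the training plot, is the average negative log-probability the model assigns to the true class, with labels given as integer class indices rather than one-hot vectors. The sketch below computes it by hand on two made-up samples. It is an illustration of the formula, not the training code.

```python
import math


def sparse_categorical_crossentropy(true_labels, predicted_probs):
    """Mean of -log(p[true class]) over all samples.

    true_labels holds integer class indices; predicted_probs holds one
    probability distribution (a list summing to 1) per sample.
    """
    eps = 1e-12  # guards against log(0) for a confidently wrong prediction
    losses = [
        -math.log(max(probs[label], eps))
        for label, probs in zip(true_labels, predicted_probs)
    ]
    return sum(losses) / len(losses)


# Two hypothetical samples with three classes each.
true_labels = [0, 2]
predicted_probs = [[0.5, 0.25, 0.25], [0.1, 0.1, 0.8]]
loss = sparse_categorical_crossentropy(true_labels, predicted_probs)
```

Overfitting typically shows up as this loss continuing to fall on the training set while it flattens or rises on the validation set.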

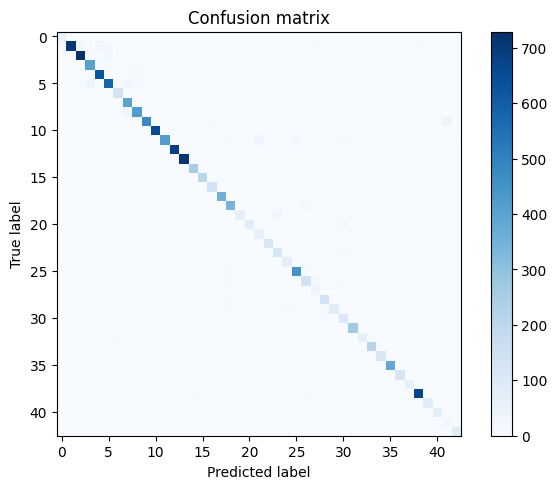

Test Results

The test results were promising: accuracy was about 94%, and most of the signs were classified correctly, as shown in the confusion matrix.
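For reference, a confusion matrix and the accuracy derived from it can be computed as in the sketch below. The labels are a tiny made-up example with three classes, not the German traffic sign test set.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """matrix[t][p] counts samples of true class t that were predicted as p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. classified correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Hypothetical labels for six test samples.
true_labels = [0, 0, 1, 1, 2, 2]
predicted_labels = [0, 0, 1, 2, 2, 2]

matrix = confusion_matrix(true_labels, predicted_labels, num_classes=3)
acc = accuracy(matrix)
```

Off-diagonal entries show which pairs of classes get confused with each other, which a single accuracy figure hides.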